RESHAPE17 | programmable skins

Project Description

Designers

METALLAXI

We live in an era of mass customization: people are more self-expressive than ever and want to customize and personalize the objects they own. Consumer needs have become more heterogeneous, and advances in technology have allowed brands to experiment with new ways of satisfying the consumer's need to personalize a product. Individuals have long expressed themselves through various media, such as the clothing they wear. Emerging technologies such as Augmented Reality (AR) have opened new doors in the field of Interaction Design and in the way we communicate with our garments. By combining AR and fashion, people could in the future use this technology to create an artificial second skin, communicating with their own bodies and designing their own personal garments while mixing the virtual and the physical world.

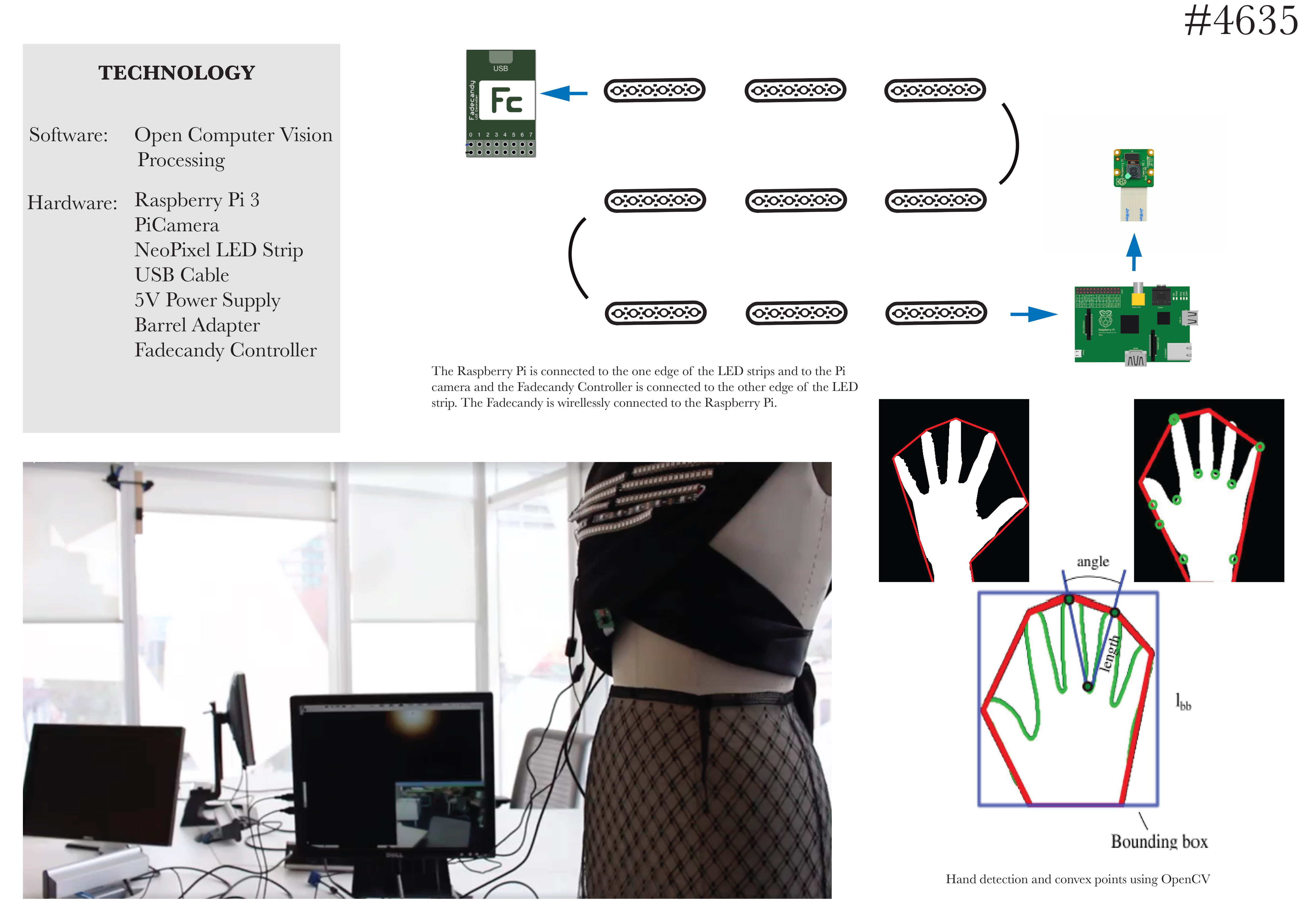

As a first step toward that future, I designed and built an interactive shirt that lets a user draw his or her own designs on it with AR from a distance. Using the Open Source Computer Vision Library (OpenCV), Python 2.7, a Raspberry Pi 3 and a Pi camera, I was able to detect and track the user’s hand gestures and motion from up to 15 inches away. With this tracking and motion data as input, I then used Processing (a software sketchbook and language for coding within the context of the visual arts), NeoPixel LED strips and a Fadecandy controller (a tool for creating interactive light art with addressable LED lighting) to build an interactive artwork that displays the user’s drawing.

For the first version of this shirt I purchased 2 metres of woven fabric (chosen to be strong enough to support all the LEDs and power supplies), a Raspberry Pi 3, the latest version of the Pi camera, 1 strip of 100 NeoPixel LEDs, 1 USB cable, a soldering iron, a 5V power supply, a barrel adapter and a Fadecandy. The first steps were to connect the Pi to a computer screen and to install OpenCV and Python 2.7 on the Raspberry Pi, which I used to write the entire script.

The first goal was to get the Pi camera to identify a user’s hand, track its motion and thereby take control of the mouse cursor on the screen. I achieved this by combining several image-processing methods suggested by Adrian Rosebrock’s book “PyImageSearcher”. The script identified the region of the real-time video that contained the hand (the live video was shown on the computer screen so that the process could be followed) and found the convex points of the hand, which in this case were the tips of the fingers. Once a convex point was found, a white circle appeared above the fingers in the video, and from that moment the hand had control of the computer’s cursor.
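As a rough illustration of the fingertip step, the sketch below takes a hand outline as a list of (x, y) pixel coordinates, computes its convex hull, picks the topmost hull point as the fingertip and scales it to screen coordinates. It is not the project's script: it is written in plain Python so it runs without a camera, and the function names, the sample outline, the 320x240 frame size and the 1920x1080 screen size are all assumptions made for the example. In OpenCV, the outline and hull would normally come from `cv2.findContours` and `cv2.convexHull`.

```python
def cross(o, a, b):
    """Z component of the cross product (a - o) x (b - o)."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points):
    """Convex hull of 2D integer points (Andrew's monotone chain)."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower = []
    for p in pts:
        # Drop points that would make the chain turn the wrong way.
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    upper = []
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    # The last point of each chain is the first point of the other.
    return lower[:-1] + upper[:-1]


def fingertip(contour):
    """Topmost hull point; image y grows downward, so take the smallest y."""
    return min(convex_hull(contour), key=lambda p: (p[1], p[0]))


def to_screen(point, frame_size, screen_size):
    """Scale a camera-frame pixel to a screen pixel (no mirroring)."""
    fx, fy = point
    fw, fh = frame_size
    sw, sh = screen_size
    return fx * (sw - 1) // (fw - 1), fy * (sh - 1) // (fh - 1)


# Hypothetical hand outline: three raised fingers with valleys between them.
example_contour = [(40, 200), (40, 120), (60, 60), (80, 110), (100, 30),
                   (120, 110), (140, 70), (160, 130), (160, 200)]
tip = fingertip(example_contour)
cursor = to_screen(tip, (320, 240), (1920, 1080))
```

The valleys between the fingers fall inside the hull, so only the fingertips and the outline's outer corners remain as hull points. That is why hull points are a convenient stand-in for fingertips.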

The second step was to install Processing on the Pi and learn how to code an interactive graphic. For this prototype I made a color-changing sphere: the cursor moves the sphere around and makes it change color. The third step was to connect the NeoPixel LEDs to the Pi; for that I used a Fadecandy, which tells each LED what color to display. The initial idea was to place flexible LCD screens on the shirt; however, to reduce the cost of the project, I used LED strips instead. Lastly, I made a pocket in the shirt to hold the Pi, and arranged and connected all the LEDs in an array to resemble a screen. The total cost of the project came to $325.
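To make the path from cursor to LED more concrete, here is a minimal sketch of how a cursor position could be turned into one lit pixel on the LED array and packed into an Open Pixel Control (OPC) message, the protocol the Fadecandy server accepts. The project did this part in Processing; Python is used here only for illustration. The 10x10 grid, the serpentine wiring order, the screen size and all function names are assumptions, since the source only says that 100 LEDs were arranged in an array.

```python
import colorsys
import struct

GRID_W, GRID_H = 10, 10  # assumed layout of the 100 LEDs


def cursor_to_cell(x, y, screen_size, grid_size=(GRID_W, GRID_H)):
    """Map a screen pixel to a (col, row) cell of the LED grid."""
    sw, sh = screen_size
    gw, gh = grid_size
    col = min(gw - 1, max(0, x * gw // sw))
    row = min(gh - 1, max(0, y * gh // sh))
    return col, row


def led_index(col, row, width=GRID_W):
    """Strip index of a cell, assuming serpentine wiring (odd rows reversed)."""
    if row % 2 == 1:
        col = width - 1 - col
    return row * width + col


def hue_to_rgb(hue):
    """Fully saturated color for a hue in [0, 1), as 0-255 integers."""
    r, g, b = colorsys.hsv_to_rgb(hue % 1.0, 1.0, 1.0)
    return int(round(r * 255)), int(round(g * 255)), int(round(b * 255))


def opc_frame(pixels, channel=0):
    """OPC 'set pixel colors' message: channel, command 0, 16-bit length, RGB data."""
    data = bytearray()
    for r, g, b in pixels:
        data.extend((r, g, b))
    header = struct.pack(">BBH", channel, 0, len(data))
    return bytes(header) + bytes(data)


# Light the LED under a hypothetical cursor position on a 1920x1080 screen.
frame = [(0, 0, 0)] * (GRID_W * GRID_H)
col, row = cursor_to_cell(601, 135, (1920, 1080))
frame[led_index(col, row)] = hue_to_rgb(0.5)
packet = opc_frame(frame)
```

The resulting `packet` could then be written to a TCP socket connected to the Fadecandy server, which listens on port 7890 in its default configuration. Cycling the hue passed to `hue_to_rgb` over time would give a color-changing effect similar to the sphere.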

Stephania Stefanakou